Why radical moderates build stronger boards

Posted on 11 Nov 2025

I’ve seen what happens when fear of conflict wins out over taking a principled stand.

Posted on 10 Nov 2025

By Simon Waller

SIMON WALLER on "The future of radical moderation" in Radical Moderate.

The propensity for artificial intelligence (AI) to supercharge extremist views means the moderating influence of community life guided by human connection has never been more important, says futurist SIMON WALLER.

Long ago, I was told that the definition of an economist is ‘someone who will tell you tomorrow why the prediction they made yesterday didn't happen today’.

Clearly this is a little tongue-in-cheek, but unlike economists, futurists tend to avoid making predictions altogether. I believe this is mostly because we are acutely aware of how uncertain the future really is.

That being said, there are patterns from the past that can certainly provide indicators of the direction the future might take. Sadly, when it comes to artificial intelligence (AI), those patterns aren’t necessarily that reassuring.

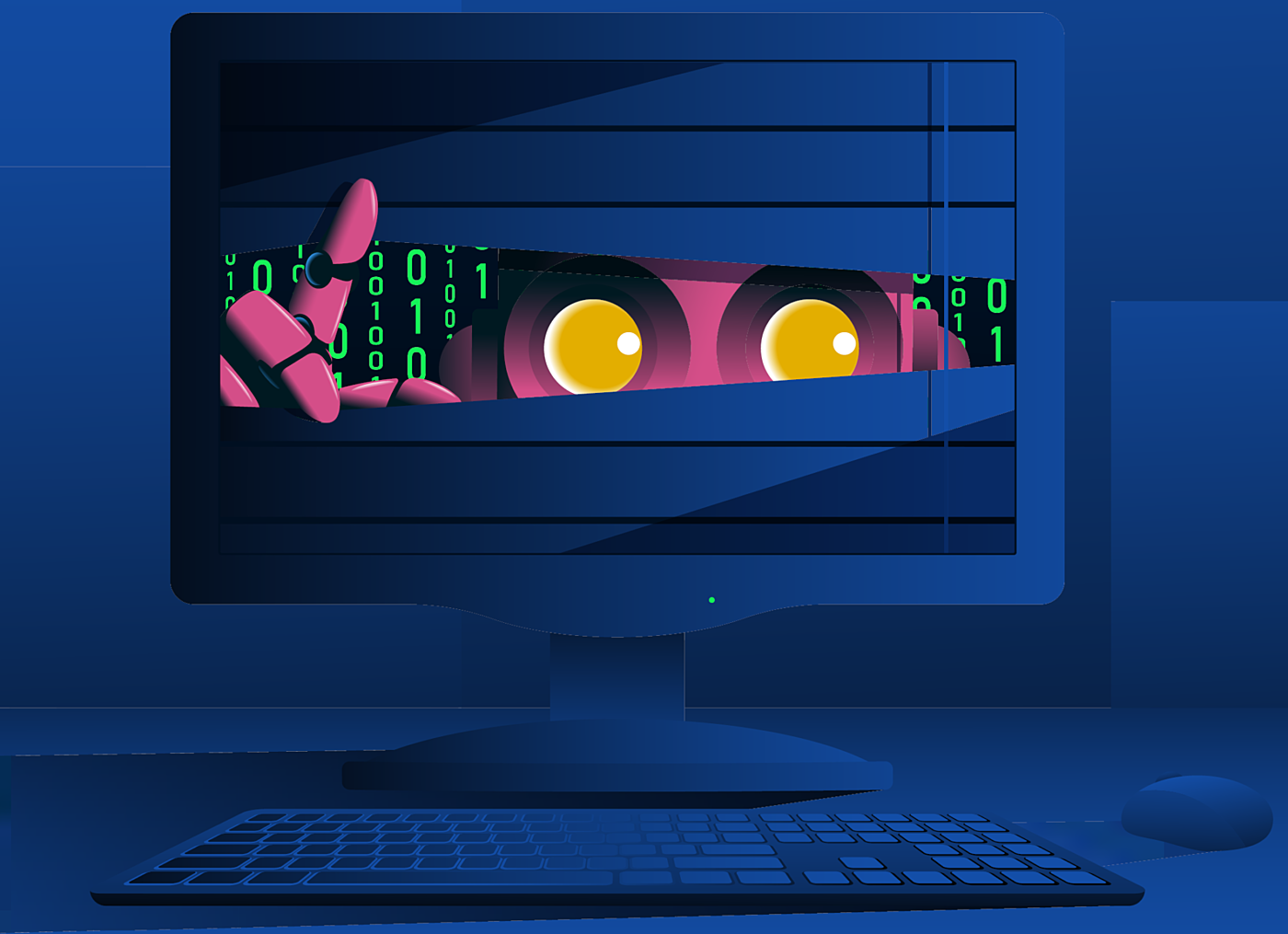

Although we tend to anthropomorphise technology and give it human attributes (such as calling it "intelligence"), this comparison is not always helpful. One way in which it’s entirely unhelpful is when we think about agency and motivation. A human has their own agency, makes their own decisions and acts in their own self-interest.

AI’s agency, on the other hand, is controlled entirely by the technology company that sits behind it. Ultimately, the words generated by ChatGPT are dictated by OpenAI, Copilot does the bidding of Microsoft and Llama responses are shaped by the whims of Meta.

So, if we want to understand the output of AI, we really need to understand the underlying motivations of the organisations that control it. When we look at AI through this lens, there are some good reasons to be concerned about the level of balance and moderation of its output.

First, the dominant business model of many of these tech companies is advertising, which in turn rewards "time on site" and similar metrics focused on keeping users engaged for as long as possible. It’s now well understood that platforms such as Facebook and YouTube, which are driven by these types of metrics, tend towards more extreme content (www.wbs.ac.uk/news/how-social-media-platforms-fuel-extreme-opinions-andhate-speech/).


Sadly, we live in a society where outrage and conflict are more compelling than compassion, and it is easy to imagine a world where the output of AI models is skewed towards extremism as a way of keeping users engaged for longer.

Second, the content these models are trained on is not without bias. Although current court cases allege that Meta trained its AI on whole libraries of pirated books (https://theconversation.com/meta-allegedlyused-pirated-books-to-train-ai-australian-authorshave-objected-but-us-courts-may-decide-if-this-isfair-use-253105), research papers and other material, the simplest and most accessible source of information for technology companies is what is published openly on the internet and on social platforms. In fact, platforms such as Reddit are now licensing their user-generated content to big tech for AI training (https://arstechnica.com/ai/2024/02/reddit-has-already-booked-203m-in-revenue-licensing-data-for-ai-training/).

However, if we look at who is most active in creating information and posting it on the internet and on social media sites, we find a strong bias towards young to middle-aged white men (https://khoros.com/resources/social-media-demographics-guide). Much of the diversity of opinion and perspective that exists in the real world is missing online. And as the saying goes, what goes in is what comes out.

AI models can’t discern fact from fiction; they are designed to predict the next most likely word in a sequence. They cannot provide balance and moderation unless that balance and moderation already exist in the underlying data.

Finally, whereas technology companies have traditionally been philosophically aligned with progressive and liberal ideals, that alignment is now being challenged. During his first term of office, US President Donald Trump openly criticised big tech as biased against right-wing views. After he was barred from Facebook and Twitter (https://www.nytimes.com/2022/05/10/technology/trump-social-media-ban-timeline.html) (yes, it was still called Twitter then), he even went so far as to launch his own social network, Truth Social (https://apnews.com/article/truth-social-donaldtrump-djt-ipo-digital-world-7437d5dcc491a1459a078195ae547987).

Fearing a backlash after his re-election, many big tech companies have "bent the knee" to Trump and agreed to reduce oversight of what can be shared on their platforms. In a video released less than two weeks before Trump’s inauguration, Mark Zuckerberg announced the removal of fact-checkers (https://www.facebook.com/zuck/videos/1525382954801931/), saying there was "too much censorship".

Zuckerberg is instead following Twitter (now X) in replacing fact-checkers with Community Notes (corrections written by users and attached to the bottom of misleading or factually incorrect posts). However, the effectiveness of this approach is questionable.

Tom Stafford undertook a deep dive into the effectiveness of Community Notes for the London School of Economics and Political Science (https://blogs.lse.ac.uk/impactofsocialsciences/2025/01/14/do-community-notes-work/). It found that Community Notes often appear well after the damage has been done, or do not appear at all. It also pointed out that the choice of which Community Notes get shown is itself made by an algorithm, at the whim of the technology company that owns the platform.

This means that, in the rush to appease Trump, the data that AI models continue to be trained on is likely to become less moderate and more extreme. As a result, once these large language models have ingested this new content and are asked to generate "the next best word in a sentence," that word is likely to become more extreme as well.

The problem isn’t just that the guardrails are being taken away from what humans can post on social media sites. The guardrails have also been removed from the AI models themselves.

Some of the content filters that limit what these models can generate are being loosened, allowing them to produce more offensive and radical content.

I know I said that futurists avoid making predictions, but all of this points towards a future of more extreme content online and less moderation. It’s likely that we’ve already passed peak online trust, and the decline from this point could be rapid.

A combination of technologies, including AI and deepfaked voice and video, means we can no longer be sure what is real online.

Although this enshittification of the internet is now happening on the grandest of scales, the silver lining is that what we do in real life, what we do in our communities and the human-to-human connections that many of us are making, well, that’s more important than ever.

Simon Waller is a strategist and futurist who works extensively with the public and for-purpose sectors. He is also the independent board chair for Myli, a not-for-profit library corporation that provides library services across much of Melbourne’s outer east. Simon’s new podcast can be found at thefuturewithfriends.com.

